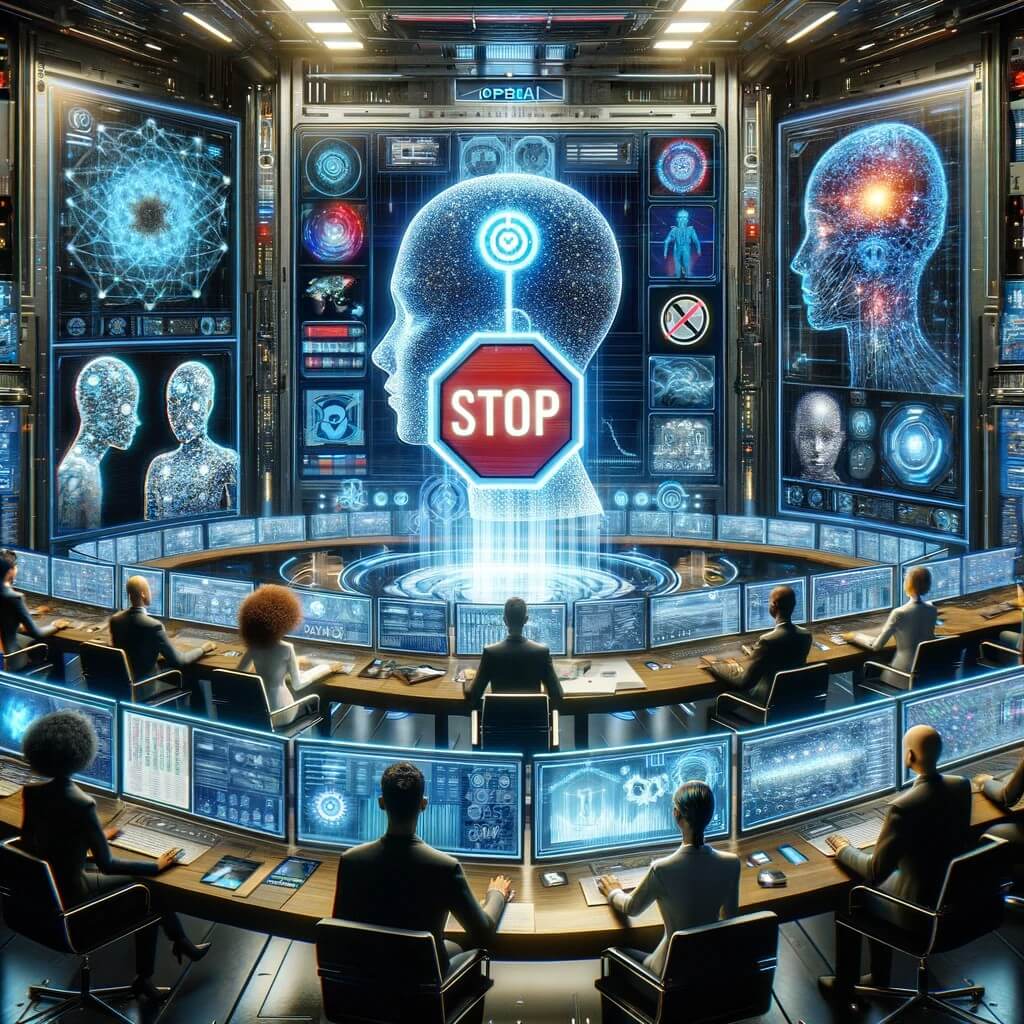

OpenAI has unveiled a new safety initiative that gives its board of directors the authority to override Chief Executive Sam Altman if it judges the risks of AI development to be too high. Called the Preparedness Framework, the strategy aims to close existing gaps in the study of frontier AI risks and to systematize safety protocols. The framework describes OpenAI’s processes for monitoring, evaluating, forecasting, and safeguarding against catastrophic risks posed by increasingly powerful AI models.

Framework for high-risk AI developments

Under the initiative, OpenAI will establish specialized teams to oversee different aspects of AI development risk. The Safety Systems team will focus on potential abuses and risks related to existing AI models such as ChatGPT. The Preparedness team will scrutinize frontier models, while a dedicated Superalignment team will monitor the development of superintelligent models. All of these teams will operate under the purview of the board of directors.

As OpenAI works toward AI that surpasses human intelligence, the company emphasizes the need to anticipate future challenges. New models will be rigorously tested, pushed “to their limits,” and then evaluated across four risk categories: cybersecurity, persuasion (lies and disinformation), model autonomy (autonomous decision-making), and CBRN (chemical, biological, radiological, and nuclear threats). Each category will be assigned a risk score (low, medium, high, or critical), followed by a post-mitigation score. Deployment will proceed if the risk is medium or below, continue with precautions if it’s high, and halt if it is deemed critical. OpenAI also commits to accountability measures, bringing in independent third parties for audits if problems arise.
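To make the scoring rule concrete, here is a minimal Python sketch of the decision logic as this article describes it. It is an illustration, not OpenAI's implementation: the names `Risk`, `CATEGORIES`, and `deployment_decision` are hypothetical, and the choice to treat the highest post-mitigation score across the four categories as the overall risk is an assumption, since the article does not say how category scores are combined.

```python
from enum import IntEnum


class Risk(IntEnum):
    """The four risk levels named in the article, ordered by severity."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


# The four tracked risk categories (identifier spellings are hypothetical).
CATEGORIES = ("cybersecurity", "persuasion", "model_autonomy", "cbrn")


def deployment_decision(post_mitigation: dict[str, Risk]) -> str:
    """Map post-mitigation scores to the outcome described in the article.

    Assumption: the overall risk is the highest score in any category.
    """
    missing = set(CATEGORIES) - set(post_mitigation)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")

    overall = max(post_mitigation[c] for c in CATEGORIES)
    if overall <= Risk.MEDIUM:
        return "proceed"
    if overall == Risk.HIGH:
        return "proceed_with_precautions"
    return "halt"


scores = {
    "cybersecurity": Risk.MEDIUM,
    "persuasion": Risk.LOW,
    "model_autonomy": Risk.HIGH,
    "cbrn": Risk.LOW,
}
print(deployment_decision(scores))
```

Under that assumption, a single high score in any one category is enough to move a model out of the plain "proceed" outcome, even when every other category is low.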

OpenAI’s approach to AI safety and the implications ahead

OpenAI stresses close collaboration, both with external parties and among its internal teams, including Safety Systems, to monitor and mitigate real-world misuse. The same cooperation extends to Superalignment, where the focus is on identifying and addressing emergent risks associated with misalignment.

OpenAI also plans new research into how risks evolve as models scale. The goal is to forecast risks well in advance, building on the company's earlier successes with scaling laws.

As OpenAI takes a monumental step towards AI safety, questions linger about the implications of allowing a board to veto the CEO. Will this decision strike the right balance between innovation and caution? How effective will the Preparedness Framework be in foreseeing and mitigating the risks associated with developing advanced AI models? Only time will tell if this bold move positions OpenAI as a pioneer in responsible AI development or if it sparks debates on the intersection of authority and innovation in the ever-evolving field of artificial intelligence.